The Kinode Book

Kinode is a decentralized operating system, peer-to-peer app framework, and node network designed to simplify the development and deployment of decentralized applications. It is also a sovereign cloud computer, in that Kinode can be deployed anywhere and act as a server controlled by anyone. Ultimately, Kinode facilitates the writing and distribution of software that runs on privately-held, personal server nodes or node clusters.

You are reading the Kinode Book, which is a technical document targeted at developers.

Read the Kinode Whitepaper here.

If you're a non-technical user:

- Learn about Kinode at the Kinode blog.

- Spin up a hosted node at Valet.

- Follow us on X.

- Join the conversation on our Discord or Telegram.

If you're a developer:

- Get your hands dirty with the Quick Start, or the more detailed My First Kinode Application tutorial.

- Learn how to boot a Kinode locally in the Installation section.

Quick Start

Run two fake Kinodes and chat between them

# Get Rust and `kit` Kinode development tools

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

cargo install --git https://github.com/kinode-dao/kit --locked

# Start two fake nodes, each in a new terminal on ports 8080 and 8081:

## First new terminal:

kit boot-fake-node

## Second new terminal:

kit boot-fake-node --home /tmp/kinode-fake-node-2 --port 8081 --fake-node-name fake2

# Back in the original terminal that is not running a fake node:

## Create and build a chat app from a template:

kit new my-chat-app

kit build my-chat-app

## Load the chat app into each node & start it:

kit start-package my-chat-app

kit start-package my-chat-app --port 8081

## Chat between the nodes:

kit inject-message my-chat-app:my-chat-app:template.os '{"Send": {"target": "fake2.dev", "message": "hello from the outside world"}}'

kit inject-message my-chat-app:my-chat-app:template.os '{"Send": {"target": "fake.dev", "message": "replying from fake2.dev using first method..."}}' --node fake2.dev

kit inject-message my-chat-app:my-chat-app:template.os '{"Send": {"target": "fake.dev", "message": "and second!"}}' -p 8081

# Or, from the terminal running one of the fake nodes:

## First fake node terminal:

m our@my-chat-app:my-chat-app:template.os '{"Send": {"target": "fake2.dev", "message": "hello world"}}'

## Second fake node terminal:

m our@my-chat-app:my-chat-app:template.os '{"Send": {"target": "fake.dev", "message": "wow, it works!"}}'

Next steps

The first chapter of the My First Kinode Application tutorial is a more detailed version of this Quick Start.

Alternatively, you can learn more about kit in the kit documentation.

If instead, you want to learn more about high-level concepts, start with the Introduction and work your way through the book in-order.

Introduction

The Kinode Book describes the Kinode operating system, both in conceptual and practical terms.

Kinode is a decentralized operating system, peer-to-peer app framework, and node network designed to simplify the development and deployment of decentralized applications. It is also a sovereign cloud computer, in that Kinode can be deployed anywhere and act as a server controlled by anyone. Ultimately, Kinode facilitates the writing and distribution of software that runs on privately-held, personal server nodes or node clusters.

Kinode eliminates boilerplate and reduces the complexity of p2p software development by providing four basic and necessary primitives:

| Primitive | Description |

|---|---|

| Networking | Passing messages from peer to peer. |

| Identity | Linking permanent system-wide identities to individual nodes. |

| Data Persistence | Storing data and saving it in perpetuity. |

| Global State | Reading shared global state (blockchain) and composing actions with this state (transactions). |

The focus of this book is how to build and deploy applications on Kinode.

Architecture Overview

Applications are composed of processes, which hold state and pass messages.

Kinode's kernel handles the startup and teardown of processes, as well as message-passing between processes, both locally and across the network.

Processes are programs compiled to Wasm, which export a single init() function.

They can be started once and complete immediately, or they can run "forever".

Peers in Kinode are identified by their onchain username in the "KNS": Kinode Name System, which is modeled after ENS. The modular architecture of the KNS allows for any Ethereum NFT, including ENS names themselves, to generate a unique Kinode identity once it is linked to a KNS entry.

Data persistence and blockchain access, as fundamental primitives for p2p apps, are built directly into the kernel. The filesystem is abstracted away from the developer, and data is automatically persisted across an arbitrary number of encrypted remote backups as configured at the user-system-level. Accessing global state in the form of the Ethereum blockchain is now trivial, with chain reads and writes handled by built-in system runtime modules.

Several other I/O primitives also come with the kernel: an HTTP server and client framework, as well as a simple key-value store. Together, these tools can be used to build performant and self-custodied full-stack applications.

Finally, by the end of this book, you will learn how to deploy applications to the Kinode network, where they will be discoverable and installable by any user with a Kinode.

Kimap and KNS

Kimap is an onchain namespace for the Kinode operating system. It serves as the base-level shared global state that all nodes use to share critical signaling data with the entire network. Kimap is organized as a hierarchical path system and has mutable and immutable keys.

Historically, discoverability of both peers and content has been a major barrier for peer-to-peer developers. Discoverability can present both social barriers (finding a new user on a game or chat) and technical obstacles (automatically acquiring networking information for a particular username). Many solutions have been designed to address this problem, but so far, the ``devex'' (developer experience) of deploying centralized services has continued to outcompete the p2p discoverability options available. Kimap aims to change this by providing a single, shared, onchain namespace that can be used to resolve to arbitrary elements of the Kinode network.

- All keys are strings containing exclusively characters 0-9, a-z (lowercase), - (hyphen) and are at maximum 63 characters long.

- A key may be one of two types, a name-key or a data-key.

- Every name-key may create sub-entries directly beneath it.

- Every name-key is an ERC-7211 NFT (non-fungible token), with a connected token-bound account2 with a counterfactual address.

- The implementation of the token-bound account may be set when a name-key is created.

- If the parent entry of a name-key has a token-bound account implementation set (a "gene"), then the name-key will automatically inherit this implementation.

- Every name-key may inscribe data in data-keys directly beneath it.

- A data-key may be mutable (a "note", prepended with

~) or immutable (a "fact", prepended with!).

https://eips.ethereum.org/EIPS/eip-721

https://ercs.ethereum.org/ERCS/erc-6551

See the Kinode whitepaper for a full specification which goes into detail regarding token-bound accounts, sub-entry management, the use of data keys, and protocol extensibility.

Kimap is tightly integrated into the operating system. At the runtime level, networking identities are verified against the kimap namespace. In userspace, programs such as the App Store make use of kimap by storing and reading data from it to define global state, such as apps available for download.

KNS: Kinode Name System

One of the most important features of a peer-to-peer network is the ability to maintain a unique and persistent identity.

This identity must be self-sovereign, unforgeable, and easy to discover by peers.

Kinode uses a PKI (public-key infrastructure) that runs within kimap to achieve this.

It should be noted that, in our system, the concepts of domain, identity, and username are identical and interchangeable.

Also important to understanding KNS identities is that other onchain identity protocols can be absorbed and supported by KNS. The KNS is not an attempt at replacing or competing with existing onchain identity primitives such as ENS and Lens. This has already been done for ENS protocol.

Kinode names are registered by a wallet and owned in the form of an NFT like any other kimap namespace entry. They contain metadata necessary to cover both:

- Domain provenance - to demonstrate that the NFT owner has provenance of a given Kinode identity.

- Domain resolution - to be able to route messages to a given identity on the Kinode network.

It's easy enough to check for provenance of a given Kinode identity. If you have a Kinode domain, you can prove ownership by signing a message with the wallet that owns the domain. However, to effectively use your Kinode identity as a domain name for your personal server, KNS domains have routing information, similar to a DNS record, that points to an IP address.

Domain Resolution

A KNS identity can either be direct or indirect.

When users first boot a node, they may decide between these two types as they create their initial identity.

Direct nodes share their literal IP address and port in their metadata, allowing other nodes to message them directly.

Again, this is similar to registering a WWW domain name and pointing it at your web server.

However, running a direct node is both technically demanding (you must maintain the ability of your machine to be accessed remotely) and a security risk (you must open ports on the server to the public internet).

Therefore, indirect nodes are the best choice for the majority of users that choose to run their own node.

Instead of sharing their IP and port, indirect nodes simply post a list of routers onchain. These routers are other direct nodes that have agreed to forward messages to indirect nodes. When a node wants to send a message to an indirect node, it first finds the node onchain, and then sends the message to one of the routers listed in the node's metadata. The router is responsible for forwarding the message to the indirect node and similarly forwarding messages from that node back to the network at large.

Specification Within Kimap

The definition of a node identity in the KNS protocol is any kimap entry that has:

- A

~net-keynote AND - Either:

a. A

~routersnote OR b. An~ipnote AND at least one of:~tcp-portnote~udp-portnote~ws-portnote~wt-portnote

Direct nodes are those that publish an ~ip and one or more of the port notes.

Indirect nodes are those that publish ~routers.

The data stored at ~net-key must be 32 bytes corresponding to an Ed25519 public key.

This is a node's signing key which is used across a variety of domains to verify ownership, including in the end-to-end encrypted networking protocol between nodes.

The owner of a namespace entry/node identity may rotate this key at any time by posting a transaction to kimap mutating the data stored at ~net-key.

The bytes at a ~routers entry must parse to an array of UTF-8 strings.

These strings should be node identities.

Each node in the array is treated by other participants in the networking protocol as a router for the parent entry.

Routers should themselves be direct nodes.

If a string in the array is not a valid node identity, or it is a valid node identity but not a direct one, that router will not be used by the networking protocol.

Further discussion of the networking protocol specification can be found here.

The bytes at an ~ip entry must be either 4 or 16 big-endian bytes.

A 4-byte entry represents a 32-bit unsigned integer and is interpreted as an IPv4 address.

A 16-byte entry represents a 128-bit unsigned integer and is interpreted as an IPv6 address.

Lastly, the bytes at any of the following port entries must be 2 big-endian bytes corresponding to a 16-bit unsigned integer:

~tcp-portsub-entry~udp-portsub-entry~ws-portsub-entry~wt-portsub-entry

These integers are translated to port numbers. In practice, port numbers used are between 9000 and 65535. Ports between 8000-8999 are usually saved for HTTP server use.

Design Philosophy

The following is a high-level overview of Kinode's design philosophy, along with the rationale for fundamental design choices.

Decentralized Software Requires a Shared Computing Environment

A single shared computing environment enables software to coordinate directly between users, services, and other pieces of software in a common language. Therefore, the best way to enable decentralized software is to provide an easy-to-use, general purpose node (that can run on anything from laptops to data centers) that runs the same operating system as all other nodes on the network. This environment must integrate with existing protocols, blockchains, and services to create a new set of protocols that operate peer-to-peer within the node network.

Decentralization is Broad

A wide array of companies and services benefit from some amount of decentralized infrastructure, even those operating in a largely centralized context. Additionally, central authority and centralized data are often essential to the proper function of a particular service, including those with decentralized properties. The Kinode environment must be flexible enough to serve the vast majority of the decentralization spectrum.

Blockchains are not Databases

To use blockchains as mere databases would negate their unique value. Blockchains are consensus tools, and exist in a spectrum alongside other consensus strategies such as Raft, lockstep protocols, CRDTs, and simple gossip. All of these are valid consensus schemes, and peer-to-peer software, such as that built on Kinode, must choose the correct strategy for a particular task, program, or application.

Decentralized Software Outcompetes Centralized Software through Permissionlessness and Composability

Therefore, any serious decentralized network must identify and prioritize the features that guarantee permissionless and composable development. Those features include:

- a persistent software environment (software can run forever once deployed)

- client diversity (more actors means fewer monopolies)

- perpetual backwards-compatibility

- a robust node network that ensures individual ownership of software and data

Decentralized Software Requires Decentralized Governance

The above properties are achieved by governance. Successful protocols launched on Kinode will be ones that decentralize their governance in order to maintain these properties. Kinode believes that systems that don't proactively specify their point of control will eventually centralize, even if unintentionally. The governance of Kinode itself must be designed to encourage decentralization, playing a role in the publication and distribution of userspace software protocols. In practice, this looks like an on-chain permissionless App Store.

Good Products Use Existing Tools

Kinode is a novel combination of existing technologies, protocols, and ideas. Our goal is not to create a new programming language or consensus algorithm, but to build a new execution environment that integrates the best of existing tools. Our current architecture relies on the following systems:

- ETH: a trusted execution layer

- Rust: a performant, expressive, and popular programming language

- Wasm: a portable, powerful binary format for executable programs

- Wasmtime: a standalone Wasm runtime

In addition, Kinode is inspired by the Bytecode Alliance and their vision for secure, efficient, and modular software. Kinode makes extensive use of their tools and standards.

Installation

This section will teach you how to get the Kinode core software, required to run a live node. After acquiring the software, you can learn how to run it and Join the Network.

- If you are just interested in starting development as fast as possible, skip to My First Kinode Application.

- If you want to run a Kinode without managing it yourself, use the Valet hosted service.

- If you want to make edits to the Kinode core software, see Build From Source.

Option 1: Download Binary (Recommended)

Kinode core distributes pre-compiled binaries for MacOS and Linux Debian derivatives, like Ubuntu.

First, get the software itself by downloading a precompiled release binary.

Choose the correct binary for your particular computer architecture and OS.

There is no need to download the simulation-mode binary — it is used behind the scenes by kit.

Extract the .zip file: the binary is inside.

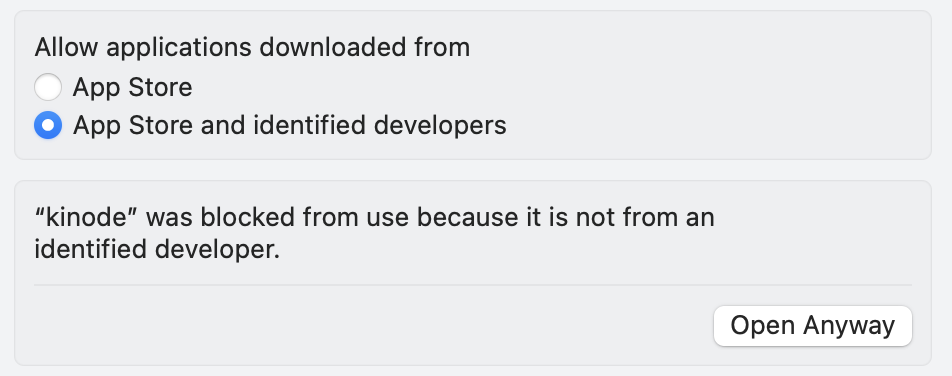

Note that some operating systems, particularly Apple, may flag the download as suspicious.

Apple

First, attempt to run the binary, which Apple will block.

Then, go to System Settings > Privacy and Security and click to Open Anyway for the kinode binary:

Option 2: Docker

Kinode can also be run using Docker. MacOS and Debian derivatives of Linux, like Ubuntu, are supported. Windows may work but is not officially supported.

Installing Docker

First, install Docker. Instructions will be different depending on your OS, but it is recommended to follow the method outlined in the official Docker website.

If you are using Linux, make sure to perform any post-install necessary afterwards. The official Docker website has optional post-install instructions.

Docker Image

The image expects a volume mounted at /kinode-home.

This volume may be empty or may contain another Kinode's data.

It will be used as the home directory of your Kinode.

Each volume is unique to each Kinode.

If you want to run multiple Kinodes, create multiple volumes.

The image includes EXPOSE directives for TCP port 8080 and TCP port 9000.

Port 8080 is used for serving the Kinode web dashboard over HTTP, and it may be mapped to a different port on the host.

Port 9000 is optional and is only required for a direct node.

If you are running a direct node, you must map port 9000 to the same port on the host and on your router.

Otherwise, your Kinode will not be able to connect to the rest of the network.

Run the following command to create a volume:

# Replace this variable with your node's intended name

export NODENAME=helloworld.os

docker volume create kinode-${NODENAME}

Then run the following command to create the container.

Replace kinode-${NODENAME} with the name of your volume if you prefer.

To map the port to a different port (for example, 80 or 6969), change 8080:8080 to PORT:8080, where PORT is the post on the host machine.

docker run -p 8080:8080 --rm -it --name kinode-${NODENAME} \

--mount type=volume,source=kinode-${NODENAME},destination=/kinode-home \

nick1udwig/kinode

which will launch your Kinode container attached to the terminal. Alternatively, you can run it detached:

docker run -p 8080:8080 --rm -dt --name kinode-${NODENAME} \

--mount type=volume,source=kinode-${NODENAME},destination=/kinode-home \

nick1udwig/kinode

Check the status of your Docker processes with docker ps.

To start and stop the container, use docker start kinode-${NODENAME} or docker stop kinode-${NODENAME}.

As long as the volume is not deleted, your data remains intact upon removal or stop. If you need further help with Docker, access the official Docker documentation here.

Option 3: Build From Source

You can compile the binary from source using the following instructions. This is only recommended if:

- The pre-compiled binaries don't work on your system and you can't use Docker for some reason, or

- You need to make changes to the Kinode core source.

Acquire Dependencies

If your system doesn't already have cmake and OpenSSL, download them:

Linux

sudo apt-get install cmake libssl-dev

Mac

brew install cmake openssl

Acquire Rust and various tools

Install Rust and some cargo tools, by running the following in your terminal:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

cargo install wasm-tools

rustup install nightly

rustup target add wasm32-wasip1 --toolchain nightly

cargo install cargo-wasi

For more information, or debugging, see the Rust lang install page.

Kinode uses the stable build of Rust, but the Wasm processes use the nightly build of Rust..

You will want to run the command rustup update on a regular basis to keep your version of the language current, especially if you run into issues compiling the runtime down the line.

You will also need to install NPM in order to build the Wasm processes that are bundled with the core binary.

Acquire Kinode core

Clone and set up the repository:

git clone https://github.com/kinode-dao/kinode.git

Build the packages that are bundled with the binary:

cargo run -p build-packages

Build the binary:

# OPTIONAL: --release flag

cargo build -p kinode

The resulting binary will be at path kinode/target/debug/kinode.

(Note that this is the binary crate inside the kinode workspace.)

You can also build the binary with the --release flag.

Building without --release will produce the binary significantly faster, as it does not perform any optimizations during compilation, but the node will run much more slowly after compiling.

The release binary will be at path kinode/target/release/kinode.

Join the Network

This page discusses joining the network with a locally-run Kinode. To instead join with a hosted node, see Valet.

These directions are particular to the Kinode beta release. Kinode is in active development on Optimism.

Starting the Kinode

Start a Kinode using the binary acquired in the previous section.

Locate the binary on your system (e.g., if you built source yourself, the binary will be in the repository at ./kinode/target/debug/kinode or ./kinode/target/release/kinode).

Print out the arguments expected by the binary:

$ ./kinode --help

A General Purpose Sovereign Cloud Computing Platform

Usage: kinode [OPTIONS] <home>

Arguments:

<home> Path to home directory

Options:

-p, --port <PORT>

Port to bind [default: first unbound at or above 8080]

--ws-port <PORT>

Kinode internal WebSockets protocol port [default: first unbound at or above 9000]

--tcp-port <PORT>

Kinode internal TCP protocol port [default: first unbound at or above 10000]

-v, --verbosity <VERBOSITY>

Verbosity level: higher is more verbose [default: 0]

-l, --logging-off

Run in non-logging mode (toggled at runtime by CTRL+L): do not write all terminal output to file in .terminal_logs directory

--reveal-ip

If set to false, as an indirect node, always use routers to connect to other nodes.

-d, --detached

Run in detached mode (don't accept input)

--rpc <RPC>

Add a WebSockets RPC URL at boot

--password <PASSWORD>

Node password (in double quotes)

--max-log-size <MAX_LOG_SIZE_BYTES>

Max size of all logs in bytes; setting to 0 -> no size limit (default 16MB)

--number-log-files <NUMBER_LOG_FILES>

Number of logs to rotate (default 4)

--max-peers <MAX_PEERS>

Maximum number of peers to hold active connections with (default 32)

--max-passthroughs <MAX_PASSTHROUGHS>

Maximum number of passthroughs serve as a router (default 0)

--soft-ulimit <SOFT_ULIMIT>

Enforce a static maximum number of file descriptors (default fetched from system)

--process-verbosity <JSON_STRING>

ProcessId: verbosity JSON object [default: ]

-h, --help

Print help

-V, --version

Print version

A home directory must be supplied — where the node will store its files.

The --rpc flag is an optional wss:// WebSocket link to an Ethereum RPC, allowing the Kinode to send and receive Ethereum transactions — used in the identity system as mentioned above.

If this is not supplied, the node will use a set of default RPC providers served by other nodes on the network.

If the --port flag is supplied, Kinode will attempt to bind that port for serving HTTP and will exit if that port is already taken.

If no --port flag is supplied, Kinode will bind to 8080 if it is available, or the first port above 8080 if not.

OPTIONAL: Acquiring an RPC API Key

Acquiring an RPC API Key

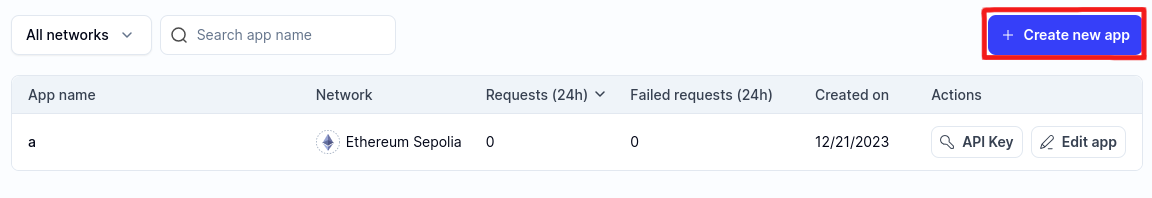

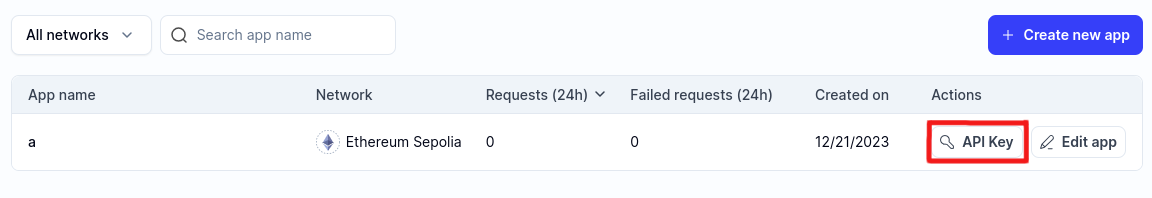

Create a new "app" on Alchemy for Optimism Mainnet.

Copy the WebSocket API key from the API Key button:

Alternative to Alchemy

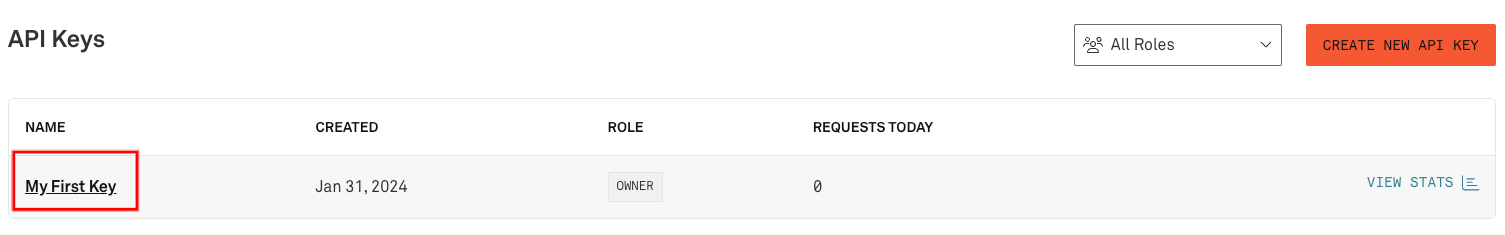

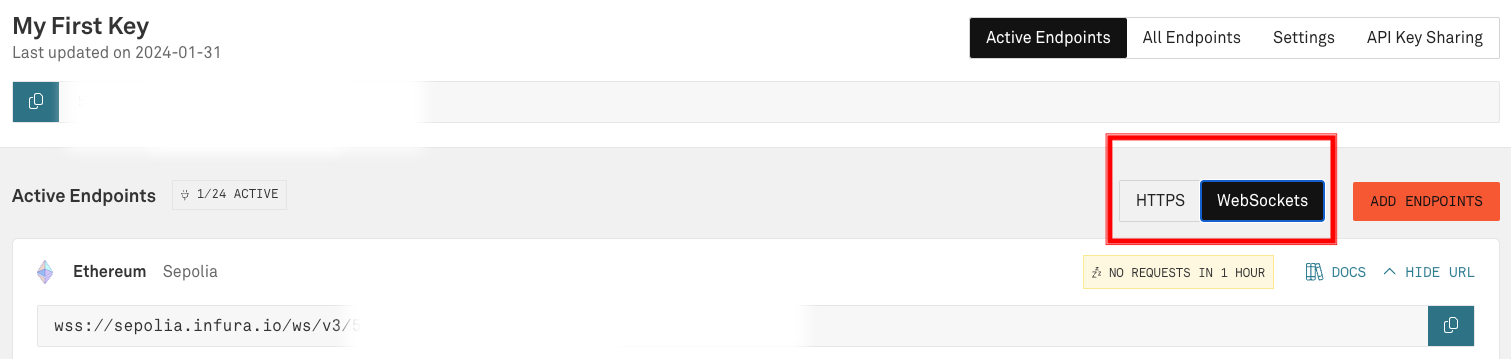

As an alternative to using Alchemy's RPC API key, Infura's endpoints may be used. Upon creating an Infura account, the first key is already created and titled 'My First Key'. Click on the title to edit the key.

Next, check the box next to Optimism "MAINNET". After one is chosen, click "SAVE CHANGES". Then, at the top, click "Active Endpoints".

On the "Active Endpoints" tab, there are tabs for "HTTPS" and "WebSockets". Select the WebSockets tab. Copy this endpoint and use it in place of the Alchemy endpoint in the following step, "Running the Binary".

Running the Binary

In a terminal window, run:

./kinode path/to/home

where path/to/home is the directory where you want your new node's files to be placed, or, if booting an existing node, is that node's existing home directory.

A new browser tab should open, but if not, look in the terminal for this line:

login or register at http://localhost:8080

and open that localhost address in a web browser.

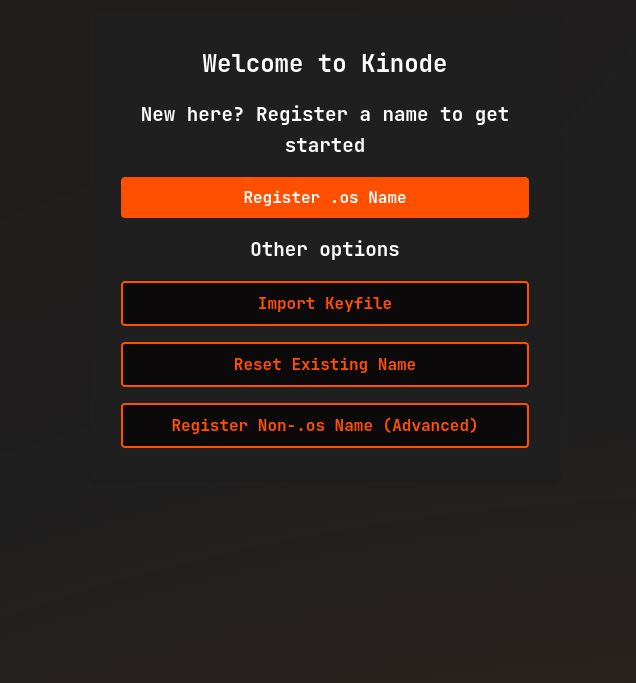

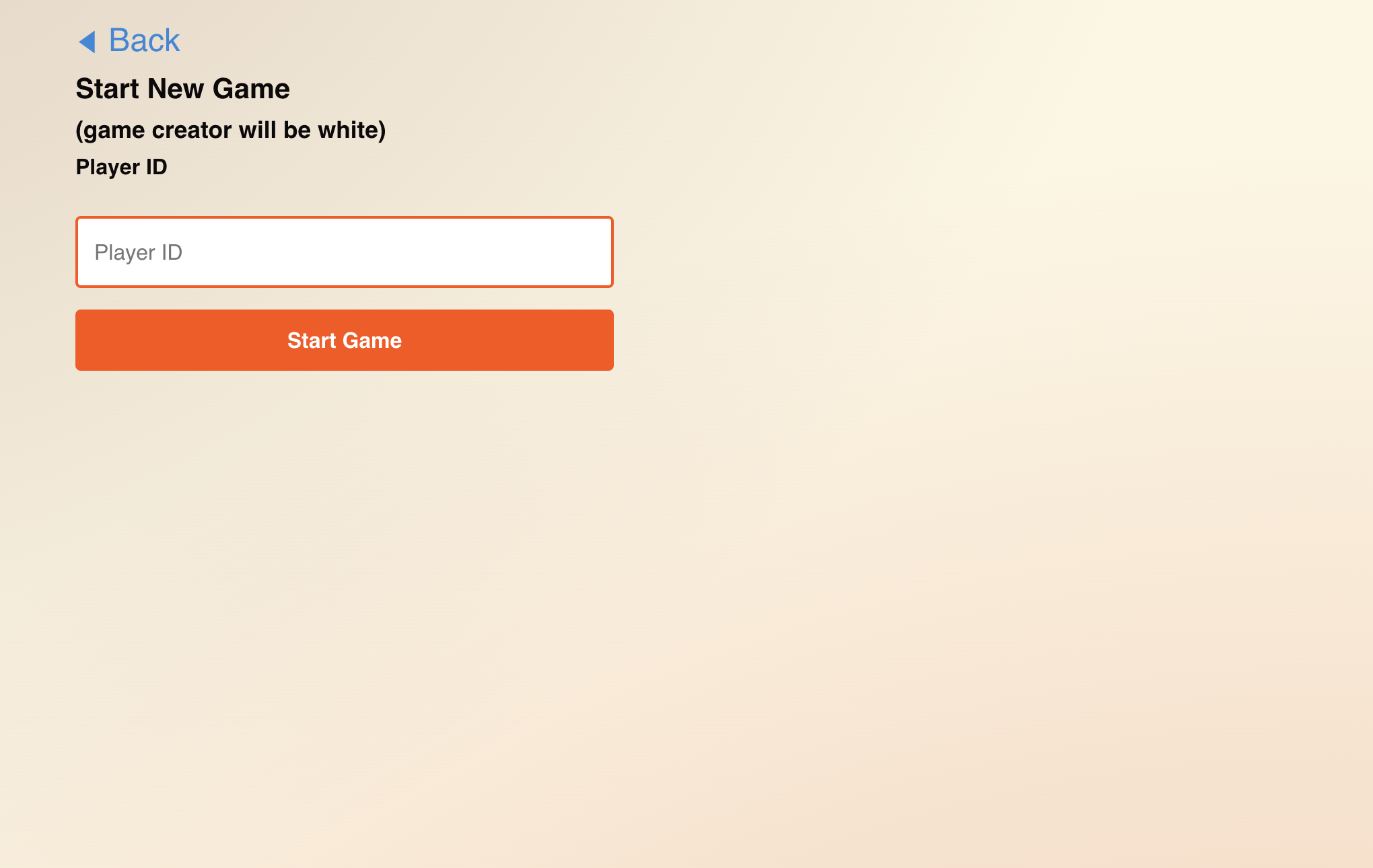

Registering an Identity

Next, register an identity.

Click Register .os Name.

If you've already got a wallet, proceed to Connecting the Wallet.

Otherwise, you're going to need to Acquire a Wallet.

Aside: Acquiring a Wallet

To register an identity, Kinode must send an Ethereum transaction, which requires ETH and a cryptocurrency wallet. While many wallets will work, the examples below use Metamask. Install Metamask here if you don't already have it.

Connecting the Wallet

After clicking Register .os Name, follow the prompts in the Connect a Wallet modal (if you haven't already connected a wallet):

Aside: Bridging ETH to Optimism

Bridge ETH to Optimism using the official bridge. Many exchanges also allow sending ETH directly to Optimism wallets.

Setting Up Networking (Direct vs. Routed Nodes)

When registering on Kinode, you may choose between running a direct or indirect (routed) node. Most users should use an indirect node. To do this, simply leave the box below name registration unchecked.

An indirect node connects to the network through a router, which is a direct node that serves as an intermediary, passing packets from sender to receiver. Routers make connecting to the network convenient, and so are the default. If you are connecting from a laptop that isn't always on, or that changes WiFi networks, use an indirect node.

A direct node connects directly, without intermediary, to other nodes (though they may, themselves, be using a router). Direct nodes may have better performance, since they remove middlemen from connections. Direct nodes also reduces the number of third parties that know about the connection between your node and your peer's node (if both you and your peer use direct nodes, there will be no third party involved).

Use an indirect node unless you are familiar with running servers. A direct node must be served from a static IP and port, since these are registered on the Ethereum network and are how other nodes will attempt to contact you.

Regardless, all packets, passed directly or via a router, are end-to-end encrypted. Only you and the recipient can read messages.

As a direct node, your IP is published on the blockchain. As an indirect node, only your router knows your IP.

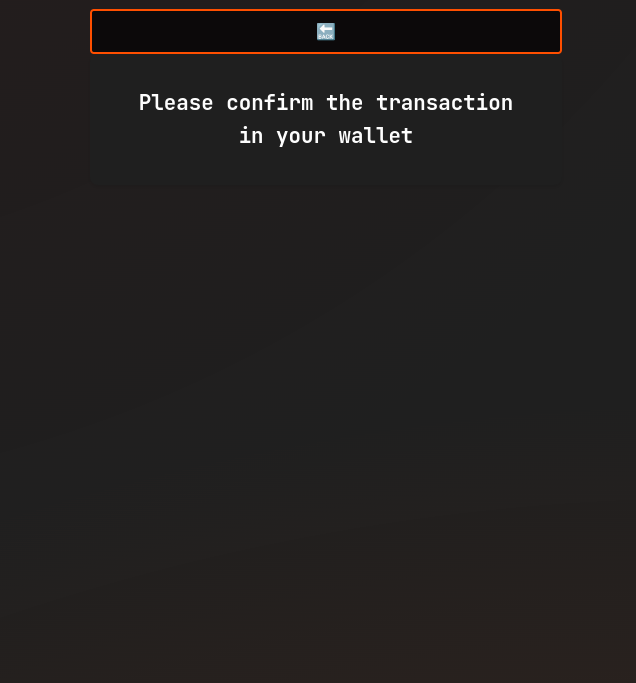

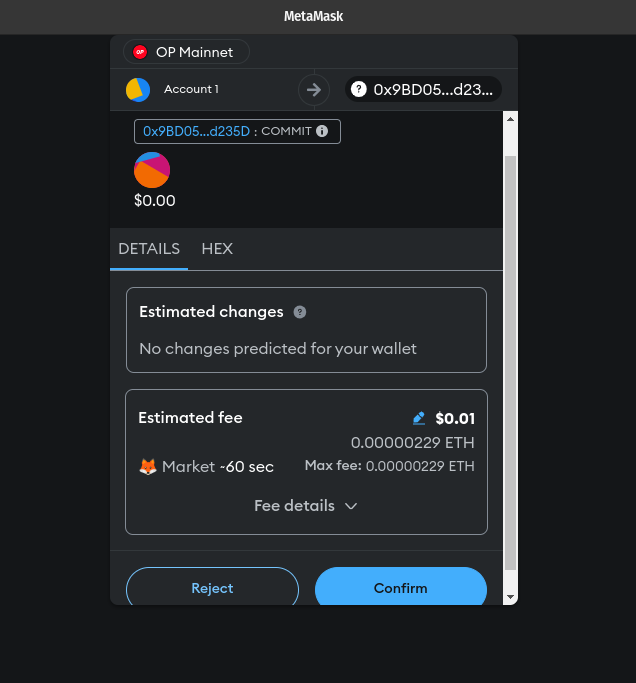

Sending the Registration Transaction

After clicking Register .os name, click through the wallet prompts to send the transaction:

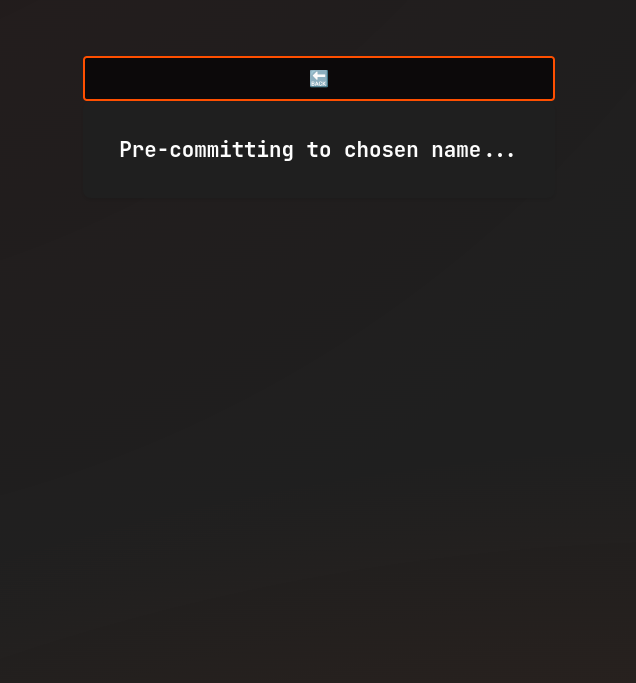

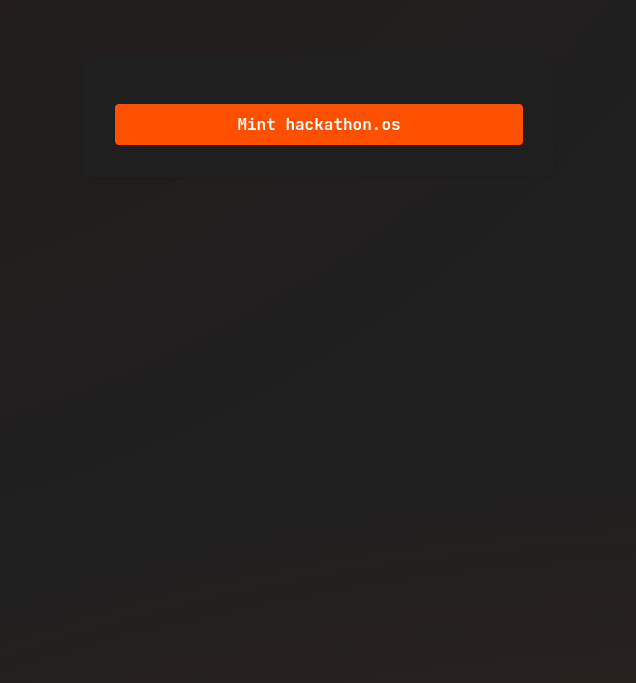

You'll see your node name being pre-committed, and then will send another transaction to mint:

What Does the Password Do?

Finally, you'll set your password.

The password encrypts the node's networking key. The networking key is how your node communicates securely with other nodes, and how other nodes can be certain that you are who you say you are.

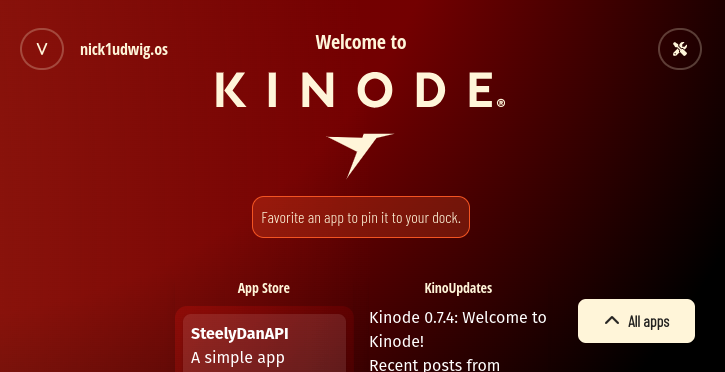

Welcome to the Network

After setting the node password, you will be greeted with the homepage.

Try downloading, installing, and using some apps on the App Store. Come ask for recommendations in the Kinode Discord!

System Components

This section describes the various components of the system, including the processes, networking protocol, public key infrastructure, HTTP server and client, files, databases, and terminal.

Processes

Processes are independent pieces of Wasm code running on Kinode. They can either be persistent, in which case they have in-memory state, or temporary, completing some specific task and returning. They have access to long-term storage, like the filesystem or databases. They can communicate locally and over the Kinode network. They can access the internet via HTTP or WebSockets. And these abilities can be controlled using a capabilities security model.

Process Semantics

Overview

On Kinode, processes are the building blocks for peer-to-peer applications. The Kinode runtime handles message-passing between processes, plus the startup and teardown of said processes. This section describes the message design as it relates to processes.

Each process instance has a globally unique identifier, or Address, composed of four elements.

- the publisher's node (containing a-z, 0-9,

-, and.) - the package name (containing a-z, 0-9, and

-) - the process name (containing a-z, 0-9, and

-). This may be a developer-selected string or a randomly-generated number as string. - the node the process is running on (often your node:

ourfor short).

A package is a set of one or more processes and optionally GUIs: a package is synonymous with an App and packages are distributed via the built-in App Store.

The way these elements compose is the following:

PackageIds look like:

[package-name]:[publisher-node]

my-cool-software:publisher-node.os

ProcessIds look like:

[process-name]:[package-name]:[publisher-node]

process-one:my-cool-software:publisher-node.os

8513024814:my-cool-software:publisher-node.os

Finally, Addresses look like:

[node]@[process-name]:[package-name]:[publisher-node]

some-user.os@process-one:my-cool-software:publisher-node.os

Processes are compiled to Wasm. They can be started once and complete immediately, or they can run forever. They can spawn other processes, and coordinate in arbitrarily complex ways by passing messages to one another.

Process State

Kinode processes can be stateless or stateful. In this case, state refers to data that is persisted between process instantiations. Nodes get turned off, intentionally or otherwise. The kernel handles rebooting processes that were running previously, but their state is not persisted by default.

Instead, processes elect to persist data, and what data to persist, when desired. Data might be persisted after every message ingested, after every X minutes, after a certain specific event, or never. When data is persisted, the kernel saves it to our abstracted filesystem, which not only persists data on disk, but also across arbitrarily many encrypted remote backups as configured at the user-system-level.

This design allows for ephemeral state that lives in-memory, or truly permanent state, encrypted across many remote backups, synchronized and safe.

Processes have access to multiple methods for persisting state:

- Saving a state object with the system calls available to every process, seen here.

- Using the virtual filesystem to read and write from disk, useful for persisting state that needs to be shared between processes.

- Using the SQLite or KV APIs to persist state in a database.

Requests and Responses

Processes communicate by passing messages, of which there are two kinds: Requests and Responses.

Addressing

When a Request or Response is received, it has an attached Address, which consists of: the source of the message, including the ID of the process that produced the Request, as well as the ID of the originating node.

The integrity of a source Address differs between local and remote messages.

If a message is local, the validity of its source is ensured by the local kernel, which can be trusted to label the ProcessId and node ID correctly.

If a message is remote, only the node ID can be validated (via networking keys associated with each node ID).

The ProcessId comes from the remote kernel, which could claim any ProcessId.

This is fine — merely consider remote ProcessIds a claim about the initiating process rather than an infallible ID like in the local case.

Please Respond

Requests can be issued at any time by a running process.

A Request can optionally expect a Response.

If it does, the Request will be retained by the kernel, along with an optional context object created by the Requests issuer.

A Request will be considered outstanding until the kernel receives a matching Response, at which point that Response will be delivered to the requester alongside the optional context.

contexts allow Responses to be disambiguated when handled asynchronously, for example, when some information about the Request must be used in handling the Response.

Responses can also be handled in an async-await style, discussed below.

Requests that expect a Response set a timeout value, after which, if no Response is received, the initial Request is returned to the process that issued it as an error.

Send errors are handled in processes alongside other incoming messages.

Inheriting a Response

If a process receives a Request, that doesn't mean it must directly issue a Response.

The process can instead issue Request(s) that "inherit" from the incipient Request, continuing its lineage.

If a Request does not expect a Response and also "inherits" from another Request, Responses to the child Request will be returned to the parent Requests issuer.

This allows for arbitrarily complex Request-Response chains, particularly useful for "middleware" processes.

There is one other use of inheritance, discussed below: passing data in Request chains cheaply.

Awaiting a Response

When sending a Request, a process can await a Response to that specific Request, queueing other messages in the meantime.

Awaiting a Response leads to easier-to-read code:

- The

Responseis handled in the next line of code, rather than in a separate iteration of the message-handling loop - Therefore, the

contextneed not be set.

The downside of awaiting a Response is that all other messages to a process will be queued until that Response is received and handled.

As such, certain applications lend themselves to blocking with an await, and others don't.

A rule of thumb is: await Responses (because simpler code) except when a process needs to performantly handle other messages in the meantime.

For example, if a file-transfer process can only transfer one file at a time, Requests can simply await Responses, since the only possible next message will be a Response to the Request just sent.

In contrast, if a file-transfer process can transfer more than one file at a time, Requests that await Responses will block others in the meantime; for performance it may make sense to write the process fully asynchronously, i.e. without ever awaiting.

The constraint on awaiting is a primary reason why it is desirable to spawn child processes.

Continuing the file-transfer example, by spawning one child "worker" process per file to be transferred, each worker can use the await mechanic to simplify the code, while not limiting performance.

There is more discussion of child processes here, and an example of them in action in the file-transfer cookbook.

Message Structure

Messages, both Requests and Responses, can contain arbitrary data, which must be interpreted by the process that receives it.

The structure of a message contains hints about how best to do this:

First, messages contain a field labeled body, which holds the actual contents of the message.

In order to cross the Wasm boundary and be language-agnostic, the body field is simply a byte vector.

To achieve composability between processes, a process should be very clear, in code and documentation, about what it expects in the body field and how it gets parsed, usually into a language-level struct or object.

A message also contains a lazy_load_blob, another byte vector, used for opaque, arbitrary, or large data.

lazy_load_blobs, along with being suitable location for miscellaneous message data, are an optimization for shuttling messages across the Wasm boundary.

Unlike other message fields, the lazy_load_blob is only moved into a process if explicitly called with (get_blob()).

Processes can thus choose whether to ingest a lazy_load_blob based on the body/metadata/source/context of a given message.

lazy_load_blobs hold bytes alongside a mime field for explicit process-and-language-agnostic format declaration, if desired.

See inheriting a lazy_load_blob for a discussion of why lazy loading is useful.

Lastly, messages contain an optional metadata field, expressed as a JSON-string, to enable middleware processes and other such things to manipulate the message without altering the body itself.

Inheriting a lazy_load_blob

The reason lazy_load_blobs are not automatically loaded into a process is that an intermediate process may not need to access the blob.

If process A sends a message with a blob to process B, process B can send a message that inherits to process C.

If process B does not attach a new lazy_load_blob to that inheriting message, the original blob from process A will be attached and accessible to C.

For example, consider again the file-transfer process discussed above.

Say one node, send.os, is transferring a file to another node, recv.os.

The process of sending a file chunk will look something like:

recv.ossends aRequestfor chunk Nsend.osreceives theRequestand itself makes aRequestto the filesystem for the piece of the filesend.osreceives aResponsefrom the filesystem with the piece of the file in thelazy_load_blob;send.ossends aResponsethat inherits the blob back torecv.oswithout itself having to load the blob, saving the compute and IO required to move the blob across the Wasm boundary.

This is the second functionality of inheritance; the first is discussed above: eliminating the need for bucket-brigading of Responses.

Errors

Messages that result in networking failures, like Requests that timeout, are returned to the process that created them as an error.

There are only two kinds of send errors: Offline and Timeout.

Offline means a message's remote target definitively cannot be reached.

Timeout is multi-purpose: for remote nodes, it may indicate compromised networking; for both remote and local nodes, it may indicate that a process is simply failing to respond in the required time.

A send error will return to the originating process the initial message, along with any optional context, so that the process can re-send the message, crash, or otherwise handle the failure as the developer desires.

If the error results from a Response, the process may optionally try to re-send a Response: it will be directed towards the original outstanding Request.

Capabilities

Processes must acquire capabilities from the kernel in order to perform certain operations. Processes themselves can also produce capabilities in order to give them to other processes. For more information about the general capabilities-based security paradigm, see the paper "Capability Myths Demolished".

The kernel gives out capabilities that allow a process to message another local process.

It also gives a capability allowing processes to send and receive messages over the network.

A process can optionally mark itself as public, meaning that it can be messaged by any local process regardless of capabilities.

See the capabilities chapter for more details.

Spawning child processes

A process can spawn "child" processes — in which case the spawner is known as the "parent". As discussed above, one of the primary reasons to write an application with multiple processes is to enable both simple code and high performance.

Child processes can be used to:

- Run code that may crash without risking crashing the parent

- Run compute-heavy code without blocking the parent

- Run IO-heavy code without blocking the parent

- Break out code that is more easily written with awaits to avoid blocking the parent

There is more discussion of child processes here, and an example of them in action in the file-transfer cookbook.

Conclusion

This is a high-level overview of process semantics. In practice, processes are combined and shared in packages, which are generally synonymous with apps.

Wasm and Kinode

It's briefly discussed here that processes are compiled to Wasm. The details of this are not covered in the Kinode Book, but can be found in the documentation for the Kinode runtime, which uses Wasmtime, a WebAssembly runtime, to load, execute, and provide an interface for the subset of Wasm components that are valid Kinode processes.

Wasm runs modules by default, or components, as described here: components are just modules that follow some specific format.

Kinode processes are Wasm components that have certain imports and exports so they can be run by Kinode.

Pragmatically, processes can be compiled using the kit tools.

The long term goal of Kinode is, using WASI, to provide a secure, sandboxed environment for Wasm components to make use of the kernel features described in this document. Further, Kinode has a Virtual File System (VFS) which processes can interact with to access files on a user's machine, and in the future WASI could also expose access to the filesystem for Wasm components directly.

Capability-Based Security

Capabilities are a security paradigm in which an ability that is usually handled as a permission (i.e. certain processes are allowed to perform an action if they are saved on an "access control list") are instead handled as a token (i.e. the process that possesses token can perform a certain action). These unforgeable tokens (as enforced by the kernel) can be passed to other owners, held by a given process, and checked for.

On Kinode, each process has an associated set of capabilities, which are each represented internally as an arbitrary JSON object with a source process:

#![allow(unused)] fn main() { pub struct Capability { pub issuer: Address, pub params: String, // JSON-string } }

The kernel abstracts away the process of ensuring that a capability is not forged. As a process developer, if a capability comes in on a message or is granted to you by the kernel, you are guaranteed that it is legitimate.

Runtime processes, including the kernel itself, the filesystem, and the HTTP client, issue capabilities to processes. Then, when a request is made by a process, the responder verifies the process's capability. If the process does not have the capability to make such a request, it will be denied.

To give a concrete example: the filesystem can read/write, and it has the capabilities for doing so. The FS may issue capabilities to processes to read/write to certain drives. A process can request to read/write somewhere, and then the FS checks if that process has the required capability. If it does, the FS does the read/write; if not, the request will be denied.

System level capabilities like the above can only be given when a process is first installed.

Startup Capabilities with manifest.json

When developing an application, manifest.json will be your first encounter with capabilties. With this file, capabilities are directly granted to a process on startup.

Upon install, the package manager (also referred to as "app store") surfaces these requested capabilities to the user, who can then choose to grant them or not.

Here is a manifest.json example for the chess app:

[

{

"process_name": "chess",

"process_wasm_path": "/chess.wasm",

"on_exit": "Restart",

"request_networking": true,

"request_capabilities": [

"net:distro:sys"

],

"grant_capabilities": [

"http-server:distro:sys"

],

"public": true

}

]

By setting request_networking: true, the kernel will give it the "networking" capability. In the request_capabilities field, chess is asking for the capability to message net:distro:sys.

Finally, in the grant_capabilities field, it is giving http-server:distro:sys the ability to message chess.

When booting the chess app, all of these capabilities will be granted throughout your node.

If you were to print out chess' capabilities using kinode_process_lib::our_capabilities() -> Vec<Capability>, you would see something like this:

#![allow(unused)] fn main() { [ // obtained because of `request_networking: true` Capability { issuer: "our-node.os@kernel:distro:sys", params: "\"network\"" }, // obtained because we asked for it in `request_capabilities` Capability { issuer: "our-node.os@net:distro:sys", params: "\"messaging\"" } ] }

Note that userspace capabilities, those created by other processes, can also be requested in a package manifest, though it's not guaranteed that the user will have installed the process that can grant the capability.

Therefore, when a userspace process uses the capabilities system, it should have a way to grant capabilities through its body protocol, as described below.

Userspace Capabilities

While the manifest fields are useful for getting a process started, it is not sufficient for creating and giving custom capabilities to other processes.

To create your own capabilities, simply declare a new one and attach it to a Request or Response like so:

#![allow(unused)] fn main() { let my_new_cap = kinode_process_lib::Capability::new(our, "\"my-new-capability\""); Request::new() .to(a_different_process) .capabilities(vec![my_new_cap]) .send(); }

On the other end, if a process wants to save and reuse that capability, they can do something like this:

#![allow(unused)] fn main() { kinode_process_lib::save_capabilities(req.capabilities); }

This call will automatically save the caps for later use.

Next time you attach this capability to a message, whether that is for authentication with the issuer, or to share it with another process, it will reach the other side just fine, and they can check it using the exact same flow.

For a code example of creating and using capabilities in userspace, see this cookbook recipe.

Startup, Spindown, and Crashes

Along with learning how processes communicate, understanding the lifecycle paradigm of Kinode processes is essential to developing useful p2p applications.

Recall that a 'package' is a userspace construction that contains one or more processes.

The Kinode kernel is only aware of processes.

When a process is first initialized, its compiled Wasm code is loaded into memory and, if the code is valid, the process is added to the kernel's process table.

Then, the kernel starts the process by calling the init() function (which is common to all processes).

This scenario is identical to when a process is re-initialized after being shut down. From the perspective of both the kernel and the process code, there is no difference.

Defining Exit Behavior

In the capabilities chapter, we saw manifest.json used to request and grant capabilities to a process. Another field in the manifest is on_exit, which defines the behavior of the process when it exits.

There are three possible behaviors for a process when it exits:

-

OnExit::None- The process is not restarted and nothing happens. -

OnExit::Restart- The process is restarted. -

OnExit::Requests- The process is not restarted, and a list of requests set by the process are fired off. These requests have thesourceandcapabilitiesof the exiting process.

Once a process has been initialized it can exit in 4 ways:

- Process code executes to completion --

init()returns. - Process code panics for any reason.

- The kernel shuts it down via

KillProcesscall. - The runtime shuts it down via graceful exit or crash.

In the event of a runtime exit the process often is best suited by restarting on the next boot. But this should be optional. This is the impetus for OnExit::Restart and OnExit::None. However, OnExit::Requests is also useful in this case, as the process can notify the appropriate services (which restarted, most likely) that it has exited.

If a process is killed by the kernel, it doesn't make sense to honor OnExit::Restart. This would reduce the strength of KillProcess and forces a full package uninstall to get it to stop running. Therefore, OnExit::Restart is treated as OnExit::None in this case only.

NOTE: If a process crashes for a 'structural' reason, i.e. the process code leads directly to a panic, and uses OnExit::Restart, it will crash continuously until it is uninstalled or killed manually.

Be careful of this!

The kernel waits an exponentially-increasing time between process restarts to avoid DOSing iteself with a crash-looping process.

If a process executes to completion, its exit behavior is always honored.

Thus we can rewrite the three possible OnExit behaviors with their full accurate logic:

-

OnExit::None- The process is not restarted and nothing happens -- no matter what. -

OnExit::Restart- The process is restarted, unless it was killed by the kernel, in which case it is treated asOnExit::None. -

OnExit::Requests- The process is not restarted, and a list ofRequests set by the process are fired off. TheseRequests have thesourceandcapabilitiesof the exiting process. If the target process of a givenRequestin the list is no longer running, theRequestwill be dropped.

Implications

Here are some good practices for working with these behaviors:

-

When a process has

OnExit::Restartas its behavior, it should be written in such a way that it can restart at any time. This means that theinit()function should start by picking up where the process may have left off, for example, reading from a local database that the process uses, or re-establishing an ETH RPC subscription (and making sure toget_logsfor any events that may have been missed!). -

Processes that produce 'child' processes should handle the exit behavior of those children. A parent process should usually use

OnExit::Restartas its behavior unless it intends to hand off the child processes to another process via some established API. A child process can useNone,Restart, orRequests, depending on its needs. -

If a child process uses

OnExit::None, the parent must be aware that the child could exit at any time and not notify the parent. This can be fine and easy to deal with if the parent has outstandingRequests to the child and can assume failure on timeout, or if the work-product of the child is irrelevant to the continued operations of the parent. -

If a child process uses

OnExit::Restart, the parent must be aware that the child will persist itself indefinitely. This is a natural fit for long-lived child processes which engage in cross-network activity and are themselves presenting a useful API. However, likeOnExit::None, the parent will not be notified if the child process is manually killed. Again, the parent should be programmed to consider this. -

If a child process uses

OnExit::Requests, it has the ability to notify the parent process when it exits. This is quite useful for child processes that create a work-product to return to the parent or if it is important that the parent do some action immediately upon the child's exit. Note that the programmer must create theRequests in the child process. They can target any process, as long as the child process has the capability to message that target. The target will often simply be the parent process. -

Requests made in

OnExit::Requestsmust also comport to the capabilities requirements that applied to the process when it was alive. -

If your processes does not have any places that it can panic, you don't have to worry about crash behavior, Rust is good for this :)

-

Parent processes "kill" child processes by building in a

Requesttype that the child will respond to by exiting, which the parent can then send. The kernel does not actually have any conception of hierarchical process relationships. The actual kernelKillProcesscommand requires root capabilities to use, and it is unlikely that your app will acquire those.

Persisting State With Processes

Given that Kinodes can, comporting with the realities of the physical world, be turned off, a well-written process must withstand being shut down and re-initialized at any time.

This raises the question: how does a process persist information between initializations?

There are two ways: either the process can use the built-in set_state and get_state functions, or it can send data to a process that does this for them.

The first option is a maximally-simple way to write some bytes to disk (where they'll be backed up, if the node owner has configured that behavior). The second option is vastly more general, because runtime modules, which can be messaged directly from custom userspace processes, offer any number of APIs. So far, there are three modules built into Kinode that are designed for persisted data: a filesystem, a key-value store, and a SQLite database.

Each of these modules offer APIs accessed via message-passing and write data to disk. Between initializations of a process, this data remains saved, even backed up. The process can then retrieve this data when it is re-initialized.

Extensions

Extensions supplement and compliment Kinode processes. Kinode processes have many features that make them good computational units, but they also have constraints. Extensions remove the constraints (e.g., not all libraries can be built to Wasm) while maintaining the advantages (e.g., the integration with the Kinode Request/Response system). The cost of extensions is that they are not as nicely bundled within the Kinode system: they must be run separately.

What is an Extension?

Extensions are WebSocket clients that connect to a paired Kinode process to extend library, language, or hardware support.

Kinode processes are Wasm components, which leads to advantages and disadvantages. The rest of the book (and in particular the processes chapter) discusses the advantages (e.g., integration with the Kinode Request/Response system and the capabilities security model). Two of the main disadvantages are:

- Only certain libraries and languages can be used.

- Hardware accelerators like GPUs are not easily accessible.

Extensions solve both of these issues, since an extension runs natively. Any language with any library supported by the bare metal host can be run as long as it can speak WebSockets.

Downsides of Extensions

Extensions enable use cases that pure processes lack. However, they come with a cost. Processes are contained and managed by your Kinode, but extensions are not. Extensions are independent servers that run alongside your Kinode. They do not yet have a Kinode-native distribution channel.

As such, extensions should only be used when absolutely necessary. Processes are more stable, maintainable, and easily upgraded. Only write an extension if there is no other choice.

How to Write an Extension?

An extension is composed of two parts: a Kinode package and the extension itself. They communicate with each other over a WebSocket connection that is managed by Kinode. Look at the Talking to the Outside World recipe for an example. The examples below show some more working extensions.

The WebSocket protocol

The process binds a WebSocket, so Kinode acts as the WebSocket server. The extension acts as a client, connecting to the WebSocket served by the Kinode process.

The process sends HttpServerAction::WebSocketExtPushOutgoing Requests to the http-server(look here and here) to communicate with the extension (see the enum defined at the bottom of this section).

Table 1: HttpServerAction::WebSocketExtPushOutgoing Inputs

| Field Name | Description |

|---|---|

channel_id | Given in a WebSocket message after a client connects. |

message_type | The WebSocketMessage type — recommended to be WsMessageType::Binary. |

desired_reply_type | The Kinode MessageType type that the extension should return — Request or Response. |

The lazy_load_blob is the payload for the WebSocket message.

The http-server converts the Request into a HttpServerAction::WebSocketExtPushData, MessagePacks it, and sends it to the extension.

Specifically, it attaches the Message's id, copies the desired_reply_type to the kinode_message_type field, and copies the lazy_load_blob to the blob field.

The extension replies with a MessagePacked HttpServerAction::WebSocketExtPushData.

It should copy the id and kinode_message_type of the message it is serving into those same fields of the reply.

The blob is the payload.

#![allow(unused)] fn main() { pub enum HttpServerAction { //... /// When sent, expects a `lazy_load_blob` containing the WebSocket message bytes to send. /// Modifies the `lazy_load_blob` by placing into `WebSocketExtPushData` with id taken from /// this `KernelMessage` and `kinode_message_type` set to `desired_reply_type`. WebSocketExtPushOutgoing { channel_id: u32, message_type: WsMessageType, desired_reply_type: MessageType, }, /// For communicating with the ext. /// Kinode's http-server sends this to the ext after receiving `WebSocketExtPushOutgoing`. /// Upon receiving reply with this type from ext, http-server parses, setting: /// * id as given, /// * message type as given (Request or Response), /// * body as HttpServerRequest::WebSocketPush, /// * blob as given. WebSocketExtPushData { id: u64, kinode_message_type: MessageType, blob: Vec<u8>, }, //... } }

The Package

The package is, minimally, a single process that serves as interface between Kinode and the extension. Each extension must come with a corresponding Kinode package.

Specifically, the interface process must:

- Bind an extension WebSocket: this will be used to communicate with the extension.

- Handle Kinode messages: e.g., Requests to be passed to the extension for processing.

- Handle WebSocket messages: these will come from the extension.

'Interface process' will be used interchangeably with 'package' throughout this page.

Bind an Extension WebSocket

The kinode_process_lib provides an easy way to bind an extension WebSocket:

kinode_process_lib::http::bind_ext_path("/")?;

which, for a process with process ID process:package:publisher.os, serves a WebSocket server for the extension to connect to at ws://localhost:8080/process:package:publisher.os.

Passing a different endpoint like bind_ext_path("/foo") will append to the WebSocket endpoint like ws://localhost:8080/process:package:publisher.os/foo.

Handle Kinode Messages

Like any Kinode process, the interface process must handle Kinode messages. These are how other Kinode processes will make Requests that are served by the extension:

- Process A sends Request.

- Interface process receives Request, optionally does some logic, sends Request on to extension via WS.

- Extension does computation, replies on WS.

- Interface process receives Response, optionally does some logic, sends Response on to process A.

The WebSocket protocol section above discusses how to send messages to the extension over WebSockets.

Briefly, a HttpServerAction::WebSocketExtPushOutgoing Request is sent to the http-server, with the payload in the lazy_load_blob.

It is recommended to use the following protocol:

- Use the

WsMessageType::BinaryWebSocket message type and use MessagePack to (de)serialize your messages. MessagePack is space-efficient and well supported by a variety of languages. Structs, dictionaries, arrays, etc. can be (de)serialized in this way. The extension must support MessagePack anyways, since theHttpServerAction::WebSocketExtPushDatais (de)serialized using it. - Set

desired_reply_typetoMessageType::Responsetype. Then the extension can indicate its reply is a Response, which will allow your Kinode process to properly route it back to the original requestor. - If possible, the original requestor should serialize the

lazy_load_blob, and the type oflazy_load_blobshould be defined accordingly. Then, all the interface process needs to do isinheritthelazy_load_blobin itshttp-serverRequest. This increases efficiency since it avoids bringing those bytes across the Wasm boundry between the process and the runtime (see more discussion here).

Handle WebSocket Messages

At a minimum, the interface process must handle:

Table 2: HttpServerRequest Variants

HttpServerRequest variant | Description |

|---|---|

WebSocketOpen | Sent when an extension connects. Provides the channel_id of the WebSocket connection, needed to message the extension: store this! |

WebSocketClose | Sent when the WebSocket closes. A good time to clean up the old channel_id, since it will no longer be used. |

WebSocketPush | Used for sending payloads between interface and extension. |

Although the extension will send a HttpServerAction::WebSocketExtPushData, the http-server converts that into a HttpServerRequest::WebSocketPush.

The lazy_load_blob then contains the payload from the extension, which can either be processed in the interface or inherited and passed back to the original requestor process.

The Extension

The extension is, minimally, a WebSocket client that connects to the Kinode interface process. It can be written in any language and it is run natively on the host as a "side car" — a separate binary.

The extension should first connect to the interface process.

The recommended pattern is to then iteratively accept and process messages from the WebSocket.

Messages come in as MessagePack'd HttpServerAction::WebSocketExtPushData and must be replied to in the same format.

The blob field is recommended to also be MessagePack'd.

The id and kinode_message_type should be mirrored by the extension: what it receives in those fields should be copied in its reply.

Examples

Find some working examples of runtime extensions below:

WIT APIs

This document describes how Kinode processes use WIT to export or import APIs at a conceptual level. If you are interested in usage examples, see the Package APIs recipe.

High-level Overview

Kinode runs processes that are WebAssembly components, as discussed elsewhere. Two key advantages of WebAssembly components are

- The declaration of types and functions using the cross-language Wasm Interface Type (WIT) language

- The composibility of components. See discussion here.

Kinode processes make use of these two advantages.

Processes within a package — a group of processes, also referred to as an app — may define an API in WIT format.

Each process defines a WIT interface; the package defines a WIT world.

The API is published alongside the package.

Other packages may then import and depend upon that API, and thus communicate with the processes in that package.

The publication of the API also allows for easy inspection by developers or by machines, e.g., LLM agents.

More than types can be published. Because components are composable, packages may publish, along with the types in their API, library functions that may be of use in interacting with that package. When set as as a dependency, these functions will be composed into new packages. Libraries unassociated with packages can also be published and composed.

WIT for Kinode

The following is a brief discussion of the WIT language for use in writing Kinode package APIs. A more full discussion of the WIT language is here.

Conventions

WIT uses kebab-case for multi-word variable names.

WIT uses // C-style comments.

Kinode package APIs must be placed in the top-level api/ directory.

They have a name matching the PackageId and appended with a version number, e.g.,

$ tree chat

chat

├── api

│ └── chat:template.os-v0.wit

...

What WIT compiles into

WIT compiles into types of your preferred language.

Kinode currently recommends Rust, but also supports Python and Javascript, with plans to support C/C++ and JVM languages like Java, Scala, and Clojure.

You can see the code generated by your WIT file using the wit-bindgen CLI.

For example, to generate the Rust code for the app-store API in Kinode core, use, e.g.,

kit b app-store

wit-bindgen rust -w app-store-sys-v1 --generate-unused-types --additional_derive_attribute serde::Deserialize app-store/app-store/target/wit

In the case of Rust, kebab-case WIT variable names become UpperCamelCase.

Rust derive macros can be applied to the WIT types in the wit_bindgen::generate! macro that appears in each process.

A typical macro invocation looks like

#![allow(unused)] fn main() { wit_bindgen::generate!({ path: "target/wit", world: "chat-template-dot-os-v0", generate_unused_types: true, additional_derives: [serde::Deserialize, serde::Serialize, process_macros::SerdeJsonInto], }); }

where the field of interest here is the additional_derives.

Types

The built-in types of WIT closely mirror Rust's types, with the exception of sets and maps.

Users can define struct-like types called records, and enum-like types called variants.

Users can also define funcs with function signatures.

Interfaces

interfaces define a set of types and functions and, in Kinode, are how a process signals its API.

Worlds

worlds define a set of imports and exports and, in Kinode, correspond to a package's API.

They can also include other worlds, copying that worlds imports and exports.

An export is an interface that a package defines and makes available, while an import is an interface that must be made available to the package.

If an interface contains only types, the presence of the WIT file is enough to provide that interface: the types can be generated from the WIT file.

However, if an imported interface contains funcs as well, a Wasm component is required that exports those functions.

For example, consider the chat template's test/ package (see kit installation instructions):

kit n chat

cat chat/test/chat_test/api/*

cat chat/api/*

Here, chat-template-dot-os-v0 is the test/ package world.

It imports types from interfaces defined in two other WIT files: the top-level chat as well as tester.

Networking Protocol

1. Protocol Overview and Motivation

The Kinode networking protocol is designed to be performant, reliable, private, and peer-to-peer, while still enabling access for nodes without a static public IP address.

The networking protocol is NOT designed to be all-encompassing, that is, the only way that two Kinodes will ever communicate. Many Kinode runtimes will provide userspace access to HTTP server/client capabilities, TCP sockets, and much more. Some applications will choose to use such facilities to communicate. This networking protocol is merely a common language that every Kinode is guaranteed to speak. For this reason, it is the protocol on which system processes will communicate, and it will be a reasonable default for most applications.

In order for nodes to attest to their identity without any central authority, all networking information is made available onchain. Networking information can take two forms: direct or routed. The former allows for completely direct peer-to-peer connections, and the latter allows nodes without a physical network configuration that permits direct connections to route messages through a peer.

The networking protocol can and will be implemented in multiple underlying protocols. Since the protocol is encrypted, a secure underlying connection with TLS or HTTPS is never necessary. WebSockets are prioritized since to make purely in-browser Kinodes a possibility. The other transport protocols with slots in the onchain identity data structure are: TCP, UDP, and WebTransport.

Currently, only WebSockets and TCP are implemented in the runtime. As part of the protocol, nodes identify the supported transport protocols of their counterparty and choose the optimal one to use. Even nodes that do not share common transport protocols may communicate via routers. Direct nodes must have at least one transport protocol in common. It is strongly recommended that all nodes support WebSockets, including future browser-based nodes and mobile-nodes.

2. Onchain Networking Information

All nodes must publish an Ed25519 EdDSA networking public key onchain using the protocol registry contract. A new key transaction may be posted at any time, but because agreement on networking keys is required to establish a connection and send messages between nodes, changes to onchain networking information will temporarily disrupt networking. Therefore, all nodes must have robust access to the onchain PKI, meaning: multiple backup options and multiple pathways to read onchain data. Because it may take time for a new networking key to proliferate to all nodes, (anywhere from seconds to days depending on chain indexing access) a node that changes its networking key should expect downtime immediately after doing so.

Nodes that wish to make direct connections must post an IP and port onchain.

This is done by publishing note keys in kimap.

In particular, the networking protocol expects the following pattern of data available:

- A

~net-keynote AND - Either:

a. A

~routersnote OR b. An~ipnote AND at least one of:~tcp-portnote~udp-portnote~ws-portnote~wt-portnote

Nodes with onchain networking information (an IP address and at least one port) are referred to as direct nodes, and ones without are referred to as indirect or routed nodes.

If a node is indirect, it must initiate a connection with at least one of its allowed routers in order to begin networking. Until such a connection is successfully established, the indirect node is offline. In practice, an indirect node that wants reliable access to the network should (1) have many routers listed onchain and (2) connect to as many of them as possible on startup. In order to acquire such routers in practice, a node will likely need to provide some payment or service to them.

3. Protocol Selection

When one node seeks to send a message to another node, it first checks to see if it has an existing route to send it on. If it does, that route is used. If not, the node will use the information available about the other node to try and establish a route.

If the target node is direct, the route may be direct, using one of the available transport methods. If a direct node presents multiple ports using notes in kimap, the priority is currently:

- TCP

- WS

As more protocols are supported by various runtimes, this priority list will expand.

Once a transport method is selected, if the connection fails, the target will be considered offline. A node does not need to try every route available: if a direct node presents a port, it must service connections on that method to be considered online.

If the target node is indirect, the route must be established through one of their routers. As many routers as can be attempted within the message's timeout may be tried. The selection of which routers to try in what order is implementation-specific. When a router is being attempted, the transport method will be determined as in a standard direct connection, described above. If a router is offline, the next router is tried. If no routers are online, the indirect node will be considered offline.

4. WebSockets Protocol

This protocol does not make use of any WebSocket frames other than Binary, Ping, and Pong.

Pings should be responded to with a Pong.

These are only used to keep the connection alive.

All content is sent as Binary frames.

Binary frames in the current protocol version (1) are limited to 10MB. This includes the full serialized KernelMessage.

All data structures are serialized and deserialized using MessagePack.

4.1. Establishing a Connection

The WebSockets protocol uses the Noise Protocol Framework to encrypt all messages end-to-end.

The parameters used are Noise_XX_25519_ChaChaPoly_BLAKE2s.

Using the XX pattern means following this interactive pattern:

-> e

<- e, ee, s, es

-> s, se

The initiator is the node that is trying to establish a connection.

If the target is direct, the intiator uses the IP and port provided onchain to establish a WebSocket connection. If the connection fails, the target is considered offline.

If the target is indirect, the initiator uses the IP and port of one of the target's routers to establish a WebSocket connection. If a given router is unreachable, or fails to comport to the protocol, others should be tried until they are exhausted or too much time has passed (subject to the specific implementation). If this process fails, the target is considered offline.

If the target is indirect, before beginning the XX handshake pattern, the initiator sends a RoutingRequest to the target.

#![allow(unused)] fn main() { pub struct RoutingRequest { pub protocol_version: u8, pub source: String, pub signature: Vec<u8>, pub target: String, } }

The protocol_version is the current protocol version, which is 1.

The source is the initiator's node ID, as provided onchain.

The signature must be created by the initiator's networking public key.

The content is the routing target's node ID (i.e., the node which the initiator would like to establish an e2e encrypted connection with) concatenated with the router's node ID (i.e., the node which the initiator is sending the RoutingRequest to, which will serve as a router for the connection if it accepts).

The target is the routing target's node ID that must be signed above.

Once a connection is established, the initiator sends an e message, containing an empty payload.

The target responds with the e, ee, s, es pattern, including a HandshakePayload serialized with MessagePack.

#![allow(unused)] fn main() { struct HandshakePayload { pub protocol_version: u8, pub name: String, pub signature: Vec<u8, Global>, pub proxy_request: bool, } }

The current protocol_version is 1.

The name is the name of the node, as provided onchain.

The signature must be created by the node's networking public key, visible onchain.

The content is the public key they will use to encrypt messages on this connection.

How often this key changes is implementation-specific but should be frequent.

The proxy_request is a boolean indicating whether the initiator is asking for routing service to another node.

As the target, or receiver of the new connection, proxy_request will always be false. This field is only used by the initiator.

Finally, the initiator responds with the s, se pattern, including a HandshakePayload of their own.

After this pattern is complete, the connection switches to transport mode and can be used to send and receive messages.

4.2. Sending Messages

Every message sent over the connection is a KernelMessage, serialized with MessagePack, then encrypted using the keys exchanged in the Noise protocol XX pattern, sent in a single Binary WebSockets message.

#![allow(unused)] fn main() { struct KernelMessage { pub id: u64, pub source: Address, pub target: Address, pub rsvp: Rsvp, pub message: Message, pub lazy_load_blob: Option<LazyLoadBlob> } }

See Address, Rsvp, Message,and LazyLoadBlob data types.

4.3. Receiving Messages

When listening for messages, the protocol may ignore messages other than Binary, but should also respond to Ping messages with Pongs.

When a Binary message is received, it should first be decrypted using the keys exchanged in the handshake exchange, then deserialized as a KernelMessage.

If this fails, the message should be ignored and the connection must be closed.

Successfully decrypted and deserialized messages should have their source field checked for the correct node ID and then passed to the kernel.

4.4. Closing a Connection

A connection can be intentionally closed by any party, at any time. Other causes of connection closure are discussed in this section.

All connection errors must result in closing a connection.

Failure to send a message must be treated as a connection error.

Failure to decrypt or deserialize a message must be treated as a connection error.

If a KernelMessage's source is not the node ID which the message recipient is expecting, it must be treated as a connection error.